Machine learning has been changing the world as we know it. With the abundance of data enabled by IoT devices, machine learning has found its way into even niche applications. But running a machine learning algorithm usually requires a beefy workstation. There are machine learning frameworks that can run on single-board computers (e.g. Raspberry Pi), but running on a microcontroller was out of the question until recently. Introducing TinyML.

What is TinyML?

Tiny Machine Learning, or TinyML, is an emerging area of machine learning that aims to run ML models on microcontrollers. Pete Warden, one of the key contributors to TinyML, was motivated to start this when he saw that the OK Google team used a 14 KB neural network model on a DSP microcontroller to listen for the wake words “OK Google”. In the 32-bit microcontroller market, where parts with hundreds of kilobytes of flash are common, 14 KB is a small footprint.

TensorFlow is an end-to-end open-source machine learning platform. There is a TF version specifically for mobile and edge devices, TensorFlow Lite, and an experimental port of that framework for microcontrollers, TensorFlow Lite for Microcontrollers. From their site:

It doesn’t require operating system support, any standard C or C++ libraries, or dynamic memory allocation. The core runtime fits in 16 KB on an Arm Cortex M3, and with enough operators to run a speech keyword detection model, takes up a total of 22 KB.
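To make the "no dynamic memory allocation" point a bit more concrete, here is a minimal C++ sketch of the general idea: all working memory comes out of one fixed byte array that is reserved at build time, and a simple bump allocator hands out slices of it. This is not the TensorFlow Lite Micro API. The names `StaticArena`, `kArenaSize`, and `g_arena_storage`, as well as the 10 KB size, are assumptions made up for this illustration.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Hypothetical illustration only: this is NOT the TensorFlow Lite Micro API.
// A fixed-size arena that hands out memory by bumping an offset, so nothing
// ever calls malloc or new at run time.
class StaticArena {
 public:
  StaticArena(std::uint8_t* buffer, std::size_t size)
      : buffer_(buffer), size_(size), used_(0) {}

  // Returns a pointer to `bytes` of storage whose offset from the start of
  // the buffer is a multiple of `alignment` (must be a power of two).
  // Returns nullptr, and leaves the arena unchanged, if there is no room.
  void* Allocate(std::size_t bytes, std::size_t alignment = 16) {
    assert(alignment != 0 && (alignment & (alignment - 1)) == 0);
    const std::size_t aligned = (used_ + alignment - 1) & ~(alignment - 1);
    if (aligned > size_ || bytes > size_ - aligned) {
      return nullptr;
    }
    used_ = aligned + bytes;
    return buffer_ + aligned;
  }

  std::size_t used() const { return used_; }
  std::size_t capacity() const { return size_; }

 private:
  std::uint8_t* buffer_;  // Caller-owned storage, never freed here.
  std::size_t size_;      // Total bytes available in the buffer.
  std::size_t used_;      // Bytes consumed so far, including padding.
};

// Assumed size for the sketch; a real model dictates how much it needs.
constexpr std::size_t kArenaSize = 10 * 1024;

// Reserved statically, so the linker accounts for it in RAM up front.
alignas(16) static std::uint8_t g_arena_storage[kArenaSize];
```

The appeal on a microcontroller is that the memory budget is known at link time: if the array does not fit in RAM the build fails, and if the arena runs out at run time the allocator returns `nullptr` instead of fragmenting a heap.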

I will show an example of how to run a TFLite model on the STM32F746G. It is a high-performance MCU with DSP instructions and an FPU, based on an Arm Cortex-M7 core with 1 MB of flash. I will be using the 32F746GDISCOVERY development board, which has an LCD screen; a camera module can be bought as an add-on. The example is based on the one that is available on the TF GitHub.

Note: If the STM32 application becomes too much for you, I would suggest you try one of their Arduino examples. Those are straightforward. I tried the SparkFun Edge example too; at the time of writing, it was not worth the hassle.

Why Rewrite the Example

Here is an excerpt from the TF GitHub:

The default reference implementations in TensorFlow Lite Micro are written to be portable and easy to understand. The advantage of this approach is that we can automatically pick specialized implementations based on the microcontroller, without having to manually edit build files. It allows incremental optimization from an always-working foundation, without cluttering the reference implementations with a lot of variants.

Macros are hard to extend to multiple platforms. Using macros means that platform-specific code is scattered throughout files in a hard-to-find way and can make following the control flow difficult since you need to understand the macro state to trace it.

I get it. The team wants to support as many microcontrollers as they can. Any embedded engineer knows that sometimes even two different series of microcontrollers from the same vendor are not the same, not to mention all the other vendors out there with their own variants. That is why the example is decoupled and abstracted, so that you can easily port it from one MCU to another.

- This is the first reason I wanted to rewrite the example. I am mostly interested in STM32 microcontrollers, and I am heavily invested in their ecosystem, from the IDE, monitoring tools, and CubeMX to their HAL libraries. I wanted to get away from all those abstractions and keep the example simple so that it is easier to follow.

The GitHub STM32 example uses Mbed. Compiling it requires you to install Mbed CLI and Python 2.7. Also, if you are using a Windows machine, good luck compiling it: Make fails because the path length is too long.

- I am already familiar with the STM32 ecosystem, so it made sense to change the example to fit into this ecosystem instead of exploring the Mbed ecosystem.

- The last time I used Python 2.x was back in 2016. Also, as of January 1, 2020, Python 2 is no longer supported by its creators, the Python Software Foundation.

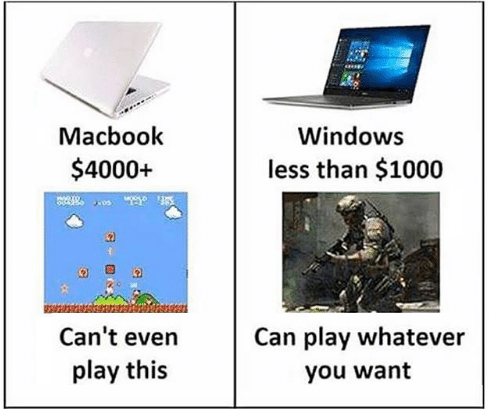

- And, I am a Windows fan. You know why (wink wink).

By the end of the tutorial, I will provide zipped STM32 project files that you can download, extract, and open using STM32CubeIDE. You can build and deploy using just one button from the IDE. Sounds simple enough? Let’s get started.

Note: TFLite Micro is still new (at the time of writing) and the library is changing on an almost daily basis. What I am about to show you might not work tomorrow, so it is a good idea to check the TFLite Micro GitHub repo or their website for updated info.

Note: I will assume you are familiar with Python and have used STM32CubeIDE before.